26 January 2019

Running multiple NST / BCST instances connected to the same database with Docker Compose

I recently encountered somewhat unexpected behavior of the NAV / Business Central Service Tier when running multiple instances connected to the same database. Our heavily code-customized solution sometimes behaves differently from a standard Cronus database, so I decided to try to reproduce the behavior in a standard, out-of-the-box environment. Of course I could have done this by simply starting multiple instances (in containers or not) against a standard database, but I expected to do it more than once, and as I really don’t like doing things manually more than once, I decided to automate it. Again, there are multiple options, but the easiest way for me (and the most natural one once you start thinking about IT problems the Docker way) was Docker Compose. Here is what I did:

The TL;DR

I didn’t have Cronus database files readily available, so I did two things:

- Extract the .mdf and .ldf files (data and log files) from a standard BC container using the mechanism explained here. It uses a volume to store the database files, so you can easily retrieve them on your host.

- Start a SQL container with the database files as arguments, so that they are attached and a new database is created. Then create 3 BC containers without a SQL server of their own, connecting them to that database instead. I had to add a script to take care of the encryption key so that stored passwords are readable from all containers, but apart from that it is very straightforward.

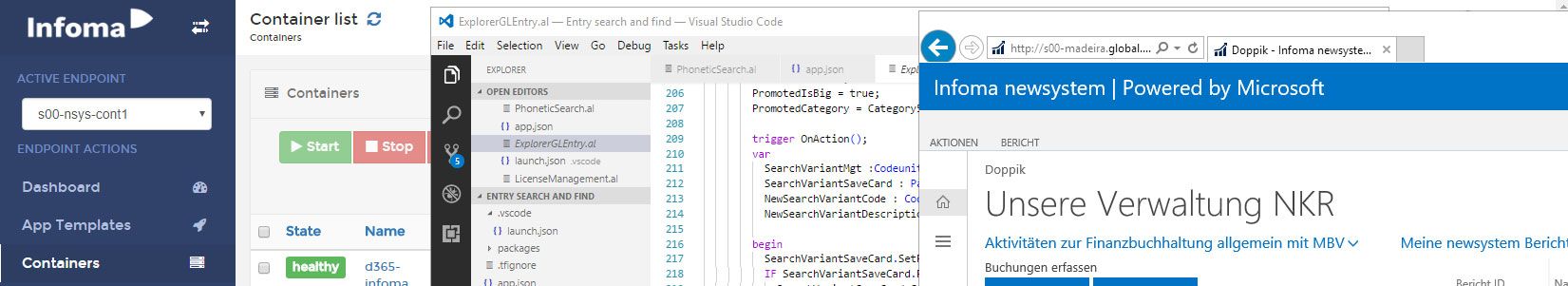

In the end, it takes one initial docker-compose up run (with docker-compose.initial.yml) to get the database, followed by docker-compose up / docker-compose down runs whenever you want to create or delete all four containers. Here is what it looks like to go from "nothing running" to 1 SQL and 3 BC containers:

Preparation: Create folders and download scripts

To get this up and running you need to do the following:

- If you don’t have Docker Compose installed (it comes automatically with a Docker Desktop install on Windows 10, but not with a Docker install on Windows Server), install it by following the instructions described here, tab "Windows". The instructions for Windows Server 2016 also work on 2019.

- Create folders c:\data and c:\data\databases

- Download https://github.com/tfenster/nav-docker-samples/raw/multi-instance-with-compose/docker-compose.initial.yml and https://github.com/tfenster/nav-docker-samples/raw/multi-instance-with-compose/docker-compose.yml and save them in c:\data

- Open a PowerShell in c:\data
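If you prefer to script the folder and download steps, here is a minimal sketch. Initialize-MultiInstanceFolder and its -Root and -SkipDownload parameters are hypothetical names of my own, not part of the nav-docker-samples repo, and the download assumes the host can reach GitHub. Installing Docker Compose is not covered.

```powershell
# Hypothetical helper (not part of nav-docker-samples): creates the folders
# from the preparation steps and downloads both compose files.
function Initialize-MultiInstanceFolder {
    param(
        # Root folder; the compose files used in this post expect c:\data
        [string]$Root = 'c:\data',
        # Skip the download if you already have both compose files in $Root
        [switch]$SkipDownload
    )

    # Creates $Root and $Root\databases in one call; -Force makes reruns harmless
    $databases = Join-Path $Root 'databases'
    New-Item -ItemType Directory -Path $databases -Force | Out-Null

    if (-not $SkipDownload) {
        $baseUrl = 'https://github.com/tfenster/nav-docker-samples/raw/multi-instance-with-compose'
        foreach ($file in 'docker-compose.initial.yml', 'docker-compose.yml') {
            # -UseBasicParsing avoids the Internet Explorer dependency on Windows Server
            Invoke-WebRequest -Uri "$baseUrl/$file" -OutFile (Join-Path $Root $file) -UseBasicParsing
        }
    }

    # Return the databases folder so the caller can inspect it
    Get-Item -Path $databases
}
```

Run Initialize-MultiInstanceFolder and then cd c:\data. Running it again only recreates nothing and overwrites the two downloaded files.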

Step 1: Get the database files

I spoke about this at the Dutch Dynamics Community in January 2018 but haven’t really blogged about it: my nav-docker-samples GitHub repo contains a small script that moves the database files of the standard Cronus database provided by any NAV/BC image to a volume on your host. If you run docker-compose -f .\docker-compose.initial.yml up in the PowerShell session you opened above, it creates a new container and does all the standard things with one exception: when you see Move database to volume in the logs, it uses my script to create the folder c:\data\databases\CRONUS and place the database files there (if you are interested in the code, you can find it here). After that, wait a few seconds until it has finished and then press Ctrl+C to stop the container. When it has stopped, run docker-compose -f .\docker-compose.initial.yml down to remove it.
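Before moving on to step 2, you may want to confirm that step 1 really left the files behind. The sketch below is a hypothetical helper, Test-CronusDatabaseFile, that checks for at least one .mdf and one .ldf file in c:\data\databases\CRONUS and prints their names. The exact names depend on the image; for the CU3 W1 image used here, docker-compose.yml expects "Demo Database NAV (13-0)_Data.mdf" and "Demo Database NAV (13-0)_Log.ldf".

```powershell
# Hypothetical helper: returns $true once step 1 has left at least one data (.mdf)
# and one log (.ldf) file in the folder that docker-compose.yml bind-mounts.
function Test-CronusDatabaseFile {
    param([string]$Path = 'c:\data\databases\CRONUS')

    if (-not (Test-Path -LiteralPath $Path -PathType Container)) { return $false }

    $mdf = @(Get-ChildItem -LiteralPath $Path -Filter '*.mdf' -File)
    $ldf = @(Get-ChildItem -LiteralPath $Path -Filter '*.ldf' -File)

    # Show the names, since the attach_dbs setting has to use exactly these
    ($mdf + $ldf) | ForEach-Object { Write-Host "Found $($_.Name)" }

    return ($mdf.Count -gt 0 -and $ldf.Count -gt 0)
}
```

If it returns $false after the "Move database to volume" step, give the container a few more seconds before pressing Ctrl+C.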

Step 2: Create the multi-instance environment

The multi-instance environment has 1 SQL server and 3 NAV servers. It is described in the docker-compose.yml you have downloaded previously:

```
 1  version: '3.3'
 2
 3  networks:
 4    default:
 5      external:
 6        name: nat
 7
 8  services:
 9
10    sql:
11      image: microsoft/mssql-server-windows-express
12      environment:
13        attach_dbs: "[{'dbName':'cronus','dbFiles':['C:\\\\databases_vol\\\\Demo Database NAV (13-0)_Data.mdf','C:\\\\databases_vol\\\\Demo Database NAV (13-0)_Log.ldf']}]"
14        ACCEPT_EULA: Y
15        sa_password: SuperSA5ecret!
16      volumes:
17        - source: 'C:\data\databases\CRONUS\'
18          target: 'C:\databases_vol\'
19          type: bind
20
21    nav:
22      image: mcr.microsoft.com/businesscentral/onprem:cu3-w1
23      environment:
24        - accept_eula=y
25        - usessl=n
26        - encryptionPassword=SuperEnc5ecret!
27        - databaseUserName=sa
28        - databasePassword=SuperSA5ecret!
29        - databaseServer=sql
30        - databaseInstance=SQLEXPRESS
31        - databaseName=cronus
32        - folders=c:\run\my=https://github.com/tfenster/nav-docker-samples/archive/multi-instance-with-compose.zip\nav-docker-samples-multi-instance-with-compose
33      volumes:
34        - source: 'C:\data\'
35          target: 'C:\key\'
36          type: bind
```

Service sql (line 10 onwards) creates the SQL server and attaches the .mdf and .ldf files (line 13) from the volume where we have stored them (lines 17-19). Note that line 13 uses the path inside the container, C:\databases_vol\, which is the bind target from line 18. Attaching databases this way is something the SQL images can do out of the box. We also need to accept the EULA (line 14, I bet you recognize that) and set a password for the sa user (line 15).
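If your database or file names differ from the Cronus CU3 files, you have to adapt line 13. Its value is a JSON array of objects with dbName and dbFiles, where dbFiles are paths inside the SQL container. The hypothetical helper New-AttachDbsValue below builds that string with ConvertTo-Json, which emits standard double-quoted JSON rather than the single-quoted variant above. I am assuming the image’s start script accepts both, since it reads the value with ConvertFrom-Json.

```powershell
# Hypothetical helper: builds an attach_dbs value for the SQL container from a
# database name and the file paths as seen INSIDE the container (the bind target).
function New-AttachDbsValue {
    param(
        [Parameter(Mandatory)] [string]$DbName,
        [Parameter(Mandatory)] [string[]]$DbFiles
    )

    # One entry per database; [ordered] keeps dbName before dbFiles in the output
    $entry = [ordered]@{ dbName = $DbName; dbFiles = $DbFiles }

    # -InputObject @(...) keeps the one-element array from being unrolled,
    # -Depth covers the nested dbFiles array, -Compress keeps it on one line
    ConvertTo-Json -InputObject @($entry) -Depth 5 -Compress
}
```

For example, New-AttachDbsValue -DbName cronus -DbFiles 'C:\databases_vol\Demo Database NAV (13-0)_Data.mdf', 'C:\databases_vol\Demo Database NAV (13-0)_Log.ldf'. In the compose file, put the result in single quotes (attach_dbs: '...'): YAML takes backslashes literally inside single quotes, so the escaped JSON backslashes reach the container unchanged, while the double-quoted form in line 13 needs them doubled once more.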

Service nav creates a BC OnPrem CU3 W1 container connected to the cronus database on container sql, instance SQLEXPRESS, using the sa user defined above (lines 27-31). Also note that it has a volume (lines 34-36) for the encryption key, as we need to share it between our instances. Line 32 downloads the multi-instance-with-compose branch of my repo and extracts it into c:\run\my, where the container looks for overrides of its standard scripts. Because of that, when we start the container with docker-compose up, it calls my SetupDatabase.ps1:

```
 1  if ($restartingInstance) {
 2      # Nothing to do
 3  } else {
 4      $keyPath = 'c:\key\'
 5      $MyEncryptionKeyFile = Join-Path $keyPath 'DynamicsNAV.key'
 6      $LockFileName = 'WaitForKey'
 7      $LockFile = Join-Path $keyPath $LockFileName
 8
 9      if (!(Test-Path $MyEncryptionKeyFile -PathType Leaf)) {
10          Write-Host "No encryption key"
11          $rnd = (Get-Random -Maximum 50) * 100
12          Write-Host "Waiting for $rnd milliseconds"
13          Start-Sleep -Milliseconds $rnd
14          if (!(Test-Path $LockFile -PathType Leaf)) {
15              New-Item -Path $keyPath -Name $LockFileName -ItemType 'file' | Out-Null
16              Write-Host "Got the lock"
17
18              # invoke default
19              . (Join-Path $runPath $MyInvocation.MyCommand.Name)
20
21              Copy-Item (Join-Path $myPath 'DynamicsNAV.key') $keyPath
22              Remove-Item $LockFile
23              Write-Host "Removed the lock"
24          } else {
25              do {
26                  Write-Host "Waiting to become unlocked"
27                  Start-Sleep -Seconds 10
28              } while (Test-Path $LockFile -PathType Leaf)
29              Write-Host "Unlocked"
30              Copy-Item $MyEncryptionKeyFile $myPath
31
32              # invoke default
33              . (Join-Path $runPath $MyInvocation.MyCommand.Name)
34          }
35      } else {
36          Write-Host "Found an encryption key"
37          Copy-Item $MyEncryptionKeyFile $myPath
38
39          # invoke default
40          . (Join-Path $runPath $MyInvocation.MyCommand.Name)
41      }
42  }
```

It first checks for an encryption key file (line 9) and, if it doesn’t find one, calls the standard script (line 19) to create one. To avoid problems with containers trying to create keys concurrently, I added a random wait (lines 11-13) and a lock file (line 15): whichever container gets there first creates the lock file, which should be very quick, and then creates the encryption key, which takes a couple of seconds. The random wait makes it unlikely, though not impossible, that two containers pass the check in line 14 at the same moment. Afterwards the first container copies the key to the volume to share it with the others (line 21) and removes the lock file (line 22). The other containers check every 10 seconds until the lock file disappears (lines 25-28), then copy the shared key to the path where the standard container scripts expect it (line 30) and call the standard script (line 33). If a key already exists on startup, the script just copies it over and calls the standard script (lines 37-40).
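The check in line 14 and the create in line 15 are two separate steps, which is why the random wait is needed at all. One way to close that gap, sketched here as an alternative and not what SetupDatabase.ps1 does, is to create the lock file with .NET’s FileMode.CreateNew, which fails if the file already exists, so checking and creating happen in a single call. Enter-KeyLock is a hypothetical name, and I am assuming the host file system behind the c:\key\ bind mount enforces CreateNew across containers.

```powershell
# Alternative lock sketch (NOT the original script): FileMode.CreateNew turns
# "does the lock exist?" and "create the lock" into one file system call.
function Enter-KeyLock {
    param([Parameter(Mandatory)] [string]$LockFile)

    try {
        # Throws an IOException if the file already exists
        $stream = [System.IO.File]::Open($LockFile, [System.IO.FileMode]::CreateNew)
        $stream.Dispose()
        return $true
    } catch [System.IO.IOException] {
        # Only an existing lock file means "someone else holds the lock";
        # anything else (e.g. a missing folder) is a real error and is rethrown
        if (Test-Path -LiteralPath $LockFile -PathType Leaf) { return $false }
        throw
    }
}
```

With this, lines 14 and 15 would collapse into if (Enter-KeyLock -LockFile $LockFile) {, and the rest of the script, including Remove-Item in line 22, could stay as it is.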

So if we call docker-compose up --scale nav=3, we tell it to create 3 BC container instances¹. They are called nav_1, nav_2 and nav_3 and reach the random wait at almost the same time (lines 10-12 in the log below). The container with the shortest wait creates the lock file and then the encryption key (lines 13 and 15). The others wait (lines 14 and 16) until it has removed the lock file (line 17) and then continue (lines 21 and 24). In the compose log it looks similar to this:

```
 1  nav_2 | Using NavUserPassword Authentication
 2  nav_3 | Using NavUserPassword Authentication
 3  nav_1 | Using NavUserPassword Authentication
 4  nav_3 | Starting Internet Information Server
 5  nav_2 | Starting Internet Information Server
 6  nav_1 | Starting Internet Information Server
 7  nav_3 | No encryption key
 8  nav_1 | No encryption key
 9  nav_2 | No encryption key
10  nav_3 | Waiting for 1400 milliseconds
11  nav_1 | Waiting for 3900 milliseconds
12  nav_2 | Waiting for 100 milliseconds
13  nav_2 | Got the lock
14  nav_3 | Waiting to become unlocked
15  nav_2 | Import Encryption Key
16  nav_1 | Waiting to become unlocked
17  nav_2 | Removed the lock
18  nav_2 | Creating Self Signed Certificate
19  nav_2 | Self Signed Certificate Thumbprint BA639C8F8570895B2922012BC41237E0B09AE3EC
20  nav_2 | Modifying Service Tier Config File with Instance Specific Settings
21  nav_3 | Unlocked
22  nav_2 | Starting Service Tier
23  nav_3 | Import Encryption Key
24  nav_1 | Unlocked
25  nav_1 | Import Encryption Key
26  nav_3 | Creating Self Signed Certificate
```
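Because the random waits differ on every run, the order of instances changes too. The hypothetical helper Get-LockSummary below reads log lines in the "instance | message" format shown above and reports each instance’s random wait and whether it took the lock. Usually the shortest wait wins, as nav_2 did here, but the helper reports what the log says rather than assuming it.

```powershell
# Hypothetical helper: summarizes the encryption-key handshake from compose
# log lines in the "<instance> | <message>" format.
function Get-LockSummary {
    param([Parameter(Mandatory)] [string[]]$LogLine)

    $summary = @{}
    foreach ($line in $LogLine) {
        # Split "nav_2 | Waiting for 100 milliseconds" into instance and message
        if (-not ($line -match '^\s*(?<name>\S+)\s*\|\s*(?<msg>.*)$')) { continue }
        $name = $Matches['name']
        $msg = $Matches['msg'].Trim()

        if (-not $summary.ContainsKey($name)) {
            $summary[$name] = [pscustomobject]@{ Instance = $name; WaitMs = $null; GotLock = $false }
        }
        if ($msg -match '^Waiting for (\d+) milliseconds$') {
            $summary[$name].WaitMs = [int]$Matches[1]
        } elseif ($msg -eq 'Got the lock') {
            $summary[$name].GotLock = $true
        }
    }

    # Shortest random wait first
    $summary.Values | Sort-Object -Property WaitMs
}
```

Against a running environment, something like Get-LockSummary -LogLine (docker-compose logs --no-color nav) should work; --no-color keeps ANSI color codes out of the lines so the pattern matches.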

With that, it should take only two commands and a few minutes to get a multi-instance environment connected to a SQL container up and running. If you already have the .mdf and .ldf files or a database on some SQL server available, it will be even quicker, as you only need step 2 in that case.

¹ If we were using Windows authentication with a gMSA, we wouldn’t need to worry about the encryption key and it would just work.