29 August 2018

Bringing it together: Windows auth in a NAV/BC image in a Docker Swarm

In my previous post I explained that you can easily use gMSAs in a Docker Swarm to enable Windows authentication. There I only used a Windows Server Core image to show that it works, but of course my actual goal is running a NAV/BC image with a Windows-authenticated WebClient in a Docker Swarm. If you are not that interested in NAV/BC specifically, this post should still be relevant, as the same applies to any HTTP(S)-based Windows authentication.

The TL;DR

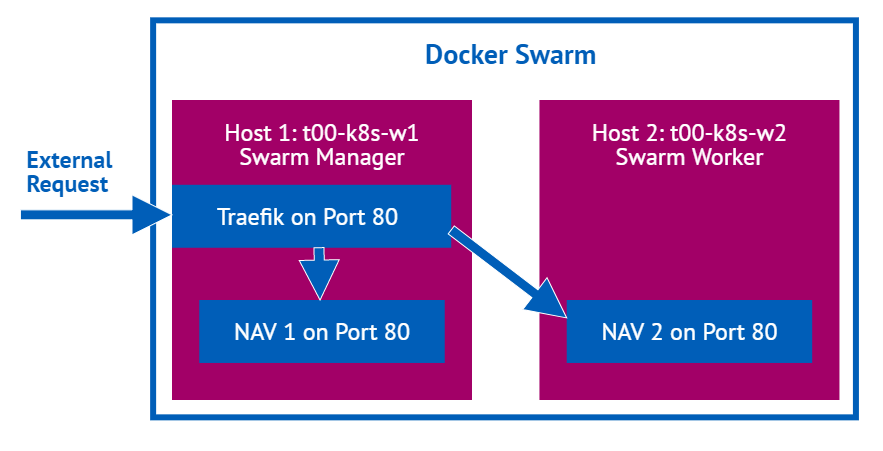

If you want to scale your NAV/BC containers, you still need to make sure that users only have one endpoint to connect to. We solve this with a so-called reverse proxy[1], in my case Traefik, which basically takes a user request and delivers it to one of many endpoints in the backend. In the end we have a Docker Swarm with two hosts and an arbitrary number of NAV/BC containers on either of them, with a Traefik container sitting in front that delivers the external requests to one of the containers. If load increases, we can dynamically add more containers, possibly also on additional hosts. And everything with Windows authentication 🙂

The details, part one: A Docker Swarm with Traefik as reverse proxy

As already mentioned, I am using Traefik, which has become quite popular and has very convenient Docker Swarm support, but other reverse proxies are possible as well. Please note that the following steps are very much what Traefik describes in their Swarm guide and what Docker Captain, Microsoft MVP and extraordinary community contributor Stefan Scherer has described in the GitHub repo for his Traefik on Windows image. For this part, I am mostly bringing together what you can find in those two sources. I assume that you have a Docker Swarm up and running as described in my previous post. Now, what do we need to do?

- First we create an overlay network, which makes sure that containers on all Swarm-connected Docker hosts can communicate without being exposed to the outside world. We do this with the following command:

docker network create --driver=overlay traefik-net

Please note that this has to be done on the Swarm manager. The network will be visible on the manager if you run

docker network ls

but not on the workers. However, the network gets created on a worker as soon as the first service using it is deployed there.

- The first service we create is Traefik, as all external requests will go through it. The command for creating this service is

docker service create --name traefik --detach=false --constraint=node.role==manager --publish published=80,target=80,mode=host --publish published=8080,target=8080,mode=host --network traefik-net stefanscherer/traefik-windows --docker --docker.swarmMode --docker.domain=axians-infoma.de --docker.watch --api --docker.endpoint=tcp://10.111.77.51:2375

A couple of things to note here: Traefik needs to run on a Swarm manager, so we add --constraint=node.role==manager. We publish port 80 (and port 8080 for the Traefik dashboard) in host mode on that node, as ingress networking is not available on Windows. We want to use axians-infoma.de as the domain, so we add --docker.domain=axians-infoma.de. Obviously you will use something different, also for --docker.endpoint=tcp://10.111.77.51:2375, which is the Docker endpoint of the manager. After a while, the result of this command should look something like this:

mv35vzvxysubq7le2lh536y15
overall progress: 1 out of 1 tasks
1/1: running   [==================================================>]
verify: Waiting 1 seconds to verify that tasks are stable...

Update: After tweeting about this post I was told by @traefik that they actually have an official Windows image. So instead of the command above, the following works just as well:

docker service create --name traefik --detach=false --constraint=node.role==manager --publish published=80,target=80,mode=host --publish published=8080,target=8080,mode=host --network traefik-net traefik:v1.6.6-nanoserver --docker --docker.swarmMode --docker.domain=axians-infoma.de --docker.watch --api --docker.endpoint=tcp://10.111.77.51:2375
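If you want to check this part before going on, a small shell function can confirm that the overlay network exists and that Traefik has a running task. This is a minimal sketch, not part of the original setup: it assumes a POSIX shell (e.g. WSL) with the docker CLI talking to the manager, for example after export DOCKER_HOST=tcp://10.111.77.51:2375 (the endpoint Traefik uses above), and the function name traefik_ready is made up for illustration. Only standard docker network ls and docker service ps options are used.

```shell
# Hypothetical helper: succeeds if the overlay network exists and the Traefik
# service has at least one task in state Running. Run it against the manager,
# because the network only shows up on a worker once a task using it lands there.
traefik_ready() {
  local net="${1:-traefik-net}" svc="${2:-traefik}"

  # --filter name= also matches substrings, so compare the names exactly with grep -x
  if ! docker network ls --filter driver=overlay --filter "name=$net" \
      --format '{{.Name}}' | grep -qx "$net"; then
    echo "overlay network $net not found" >&2
    return 1
  fi

  # Only tasks Swarm wants running; their current state should start with "Running"
  if ! docker service ps "$svc" --filter desired-state=running \
      --format '{{.CurrentState}}' | grep -q '^Running'; then
    echo "service $svc has no running task yet" >&2
    return 1
  fi

  echo "network $net exists and service $svc is running"
}

# Usage (DOCKER_HOST exported as described above):
#   traefik_ready traefik-net traefik
```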

- Our second service is a simple web server called whoami, which just returns the container ID so that we know which container the request ended up on. Again we are using Stefan Scherer’s Docker image for this:

docker service create --name whoami --detach=false --label traefik.port=8080 --label traefik.backend.loadbalancer.sticky=true --network traefik-net stefanscherer/whoami

Traefik automatically picks up new services and analyzes their labels, so it knows that we want to talk to port 8080 (--label traefik.port=8080) and that we want sticky sessions (--label traefik.backend.loadbalancer.sticky=true). Sticky sessions mean that a given client will always get routed to the same instance (the correct term in a Swarm would be “task”), which will be very important later for NAV/BC. The result will be something like this:

hm4kbn1loyz4yytgdfel2azme
overall progress: 1 out of 1 tasks
1/1: running   [==================================================>]
verify: Waiting 1 seconds to verify that tasks are stable...
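To verify which labels Traefik will actually see, docker service inspect can print single labels from the service spec with a Go template. Again a sketch under the same assumptions (POSIX shell, docker CLI pointed at the manager); service_label and check_traefik_labels are hypothetical helper names.

```shell
# Hypothetical helper: print one label of a service (empty if it is not set).
# Spec.Labels holds the labels passed with --label to docker service create.
service_label() {
  docker service inspect --format "{{index .Spec.Labels \"$2\"}}" "$1"
}

# Hypothetical helper: succeed only if the service has a traefik.port label and
# sticky sessions enabled, the two labels used for whoami above.
check_traefik_labels() {
  local port sticky
  port=$(service_label "$1" traefik.port)
  sticky=$(service_label "$1" traefik.backend.loadbalancer.sticky)
  [ -n "$port" ] && [ "$sticky" = "true" ]
}

# Usage:
#   check_traefik_labels whoami && echo "port $(service_label whoami traefik.port), sticky"
```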

- However, one instance isn’t really fun in a Swarm, so let’s scale this a bit with

docker service scale whoami=4

The result will be

whoami scaled to 4

With docker service ps we can check where our instances ended up and what their state is. So we call

docker service ps whoami

and get

ID             NAME       IMAGE                         NODE         DESIRED STATE   CURRENT STATE            ERROR   PORTS
yw0x9y2qvopr   whoami.1   stefanscherer/whoami:latest   t00-k8s-w2   Running         Running 40 seconds ago
q6xgqjqlt60d   whoami.2   stefanscherer/whoami:latest   t00-k8s-w1   Running         Running 1 second ago
g53irhw4gkhu   whoami.3   stefanscherer/whoami:latest   t00-k8s-w1   Running         Running 1 second ago
og1fuve3zunj   whoami.4   stefanscherer/whoami:latest   t00-k8s-w2   Running         Running 8 seconds ago

Looks good: we have 4 instances (or “tasks”), two running on each of our hosts (or “nodes”).
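docker service ps also lists shut-down tasks from earlier updates or failures, so on a busier Swarm it can be handy to filter for the desired state running and count the tasks per node. A sketch under the same assumptions as above; tasks_per_node is a hypothetical name.

```shell
# Hypothetical helper: count the tasks of a service that Swarm wants running,
# grouped by node and printed as node=count.
tasks_per_node() {
  docker service ps "$1" --filter desired-state=running --format '{{.Node}}' |
    sort | uniq -c | awk '{ print $2 "=" $1 }'
}

# Usage:
#   tasks_per_node whoami
```

For the output above, this would print t00-k8s-w1=2 and t00-k8s-w2=2.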

- There is no DNS name for the IP address yet, so we’ll just use the IP address itself. Traefik will however analyze the Host header to know which service we want to reach, so using curl[2] we query the IP address but add the correct Host header, and with that we get answers from our four instances. Note that the fifth request gets the same answer as the first, the sixth the same as the second, and so on, as the load balancing is simple round robin:

tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm daaeb26288a2 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm dc1f42b87cf8 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm eb0236acc579 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm daaeb26288a2 running on windows/amd64
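The manual requests above can be wrapped in a loop to see the round robin in one go. A sketch assuming curl in a POSIX shell such as WSL; probe and distinct are hypothetical helpers, and the second word of the whoami answer (I'm &lt;id&gt; running on ...) is taken as the container ID.

```shell
# Hypothetical helper: send N requests for a virtual host through Traefik and
# print the container ID from each whoami answer ("I'm <id> running on ...").
probe() {
  local host="$1" url="$2" n="${3:-6}" i=1
  while [ "$i" -le "$n" ]; do
    curl -s --header "Host: $host" "$url" | awk '{ print $2 }'
    i=$((i + 1))
  done
}

# Hypothetical helper: count the distinct lines (here: backends) on stdin.
distinct() {
  sort -u | wc -l | tr -d ' '
}

# Usage:
#   probe whoami.axians-infoma.de http://10.111.77.51:80/ 6 | distinct
```

With four whoami tasks and no cookie, six requests should yield four distinct IDs.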

- You might remember that I said the same client would always get the same instance, but now we seem to be rotating. This is because Traefik uses a cookie to track which backend to serve, which works well in a browser but needs additional parameters in curl. We use

-c cookies.txt to store the response cookie of the first request and send it with -b cookies.txt on all subsequent requests. Now we always get the same instance:

tfenster@DE00INDE044L1:~$ curl -c cookies.txt --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl -b cookies.txt --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl -b cookies.txt --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
tfenster@DE00INDE044L1:~$ curl -b cookies.txt --header 'Host: whoami.axians-infoma.de' 'http://10.111.77.51:80/'
I'm 9f975035b533 running on windows/amd64
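The same loop with a cookie jar shows the sticky sessions: the first request stores the cookie with -c and all following ones send it back with -b, as in the manual test. A sketch; probe_sticky is a hypothetical name and the jar is a throwaway temporary file rather than cookies.txt.

```shell
# Hypothetical helper: like the manual test, store the cookies of the first
# response (-c) and send them back (-b) with every following request.
probe_sticky() {
  local host="$1" url="$2" n="${3:-4}" i=2 jar
  jar=$(mktemp)
  curl -s -c "$jar" --header "Host: $host" "$url" | awk '{ print $2 }'
  while [ "$i" -le "$n" ]; do
    curl -s -b "$jar" --header "Host: $host" "$url" | awk '{ print $2 }'
    i=$((i + 1))
  done
  rm -f "$jar"
}

# Usage:
#   probe_sticky whoami.axians-infoma.de http://10.111.77.51:80/ 4 | sort -u | wc -l
```

If the sticky cookie works, the usage line prints 1, because every request lands on the same task.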

OK, now we know that Traefik works with a Docker Swarm on Windows. Good to know, but what we actually wanted was Windows authentication in NAV/BC, so let’s tackle that as well.

The details, part two: NAV/BC with Windows authentication in a Docker Swarm behind a Traefik reverse proxy[3]

Here’s what we need to do:

- As a prerequisite, make sure that whatever you plan to use as the name for your service resolves to the IP address of your manager node. In my case the service will be tst19, the Traefik domain was configured as axians-infoma.de and my manager has the IP 10.111.77.51, so I have a DNS entry that maps tst19.axians-infoma.de to 10.111.77.51. If you only want it to work on one machine, create an entry in your hosts file instead. I have also restored a Cronus 2018 CU 8 W1 demo database on an external SQL Server (no container, plain old-school SQL Server installed in a VM) and added my gMSA tst19$ as db_owner.
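For the hosts file variant, an idempotent helper avoids duplicate entries when you run the setup more than once. A sketch for a Linux/WSL shell; ensure_hosts_entry is a hypothetical name, writing to /etc/hosts needs root, and on Windows itself the hosts file lives at C:\Windows\System32\drivers\etc\hosts (the path can be passed as the third argument).

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless a line for that IP
# already lists NAME. Writing /etc/hosts requires root.
ensure_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if some line starts with the IP and has NAME as one of its fields
  if awk -v ip="$ip" -v n="$name" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
      "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root), then check that the name resolves:
#   ensure_hosts_entry 10.111.77.51 tst19.axians-infoma.de
#   getent hosts tst19.axians-infoma.de
```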

- Now it is pretty easy to create our service; we only need a couple of parameters to make sure the NAV image works as expected and that Swarm and Traefik do what we want them to do:

docker service create --name tst19 --detach=false --health-start-period 300s --label traefik.port=80 --label traefik.backend.loadbalancer.sticky=true --network traefik-net -e accept_eula=y -e auth=Windows -e databaseServer=s00-nsyssql-rmt.global.fum -e databaseInstance=SQL2016 -e databaseName=Cronus2018CU8W1 --credential-spec file://tst19.json --hostname tst19 microsoft/dynamics-nav:2018-cu8-w1

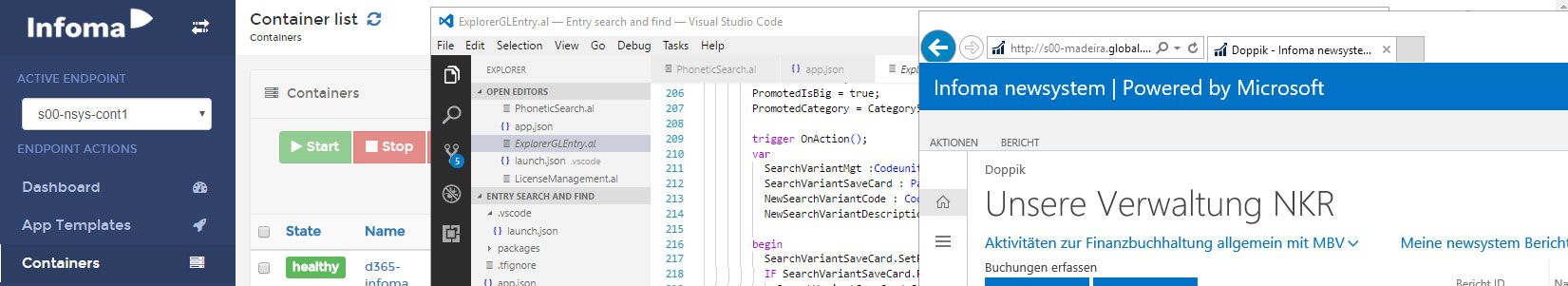

If you have followed my previous post and know a bit about the NAV Docker image, you will see that what we are doing here is creating a Swarm service, telling Traefik to use port 80 and sticky sessions, and telling NAV to connect to an external SQL Server and use Windows auth. And of course we tell Swarm to use a gMSA via --credential-spec file://tst19.json.

- Again, a Swarm service with only one instance isn’t fun, so let’s scale this

docker service scale tst19=2

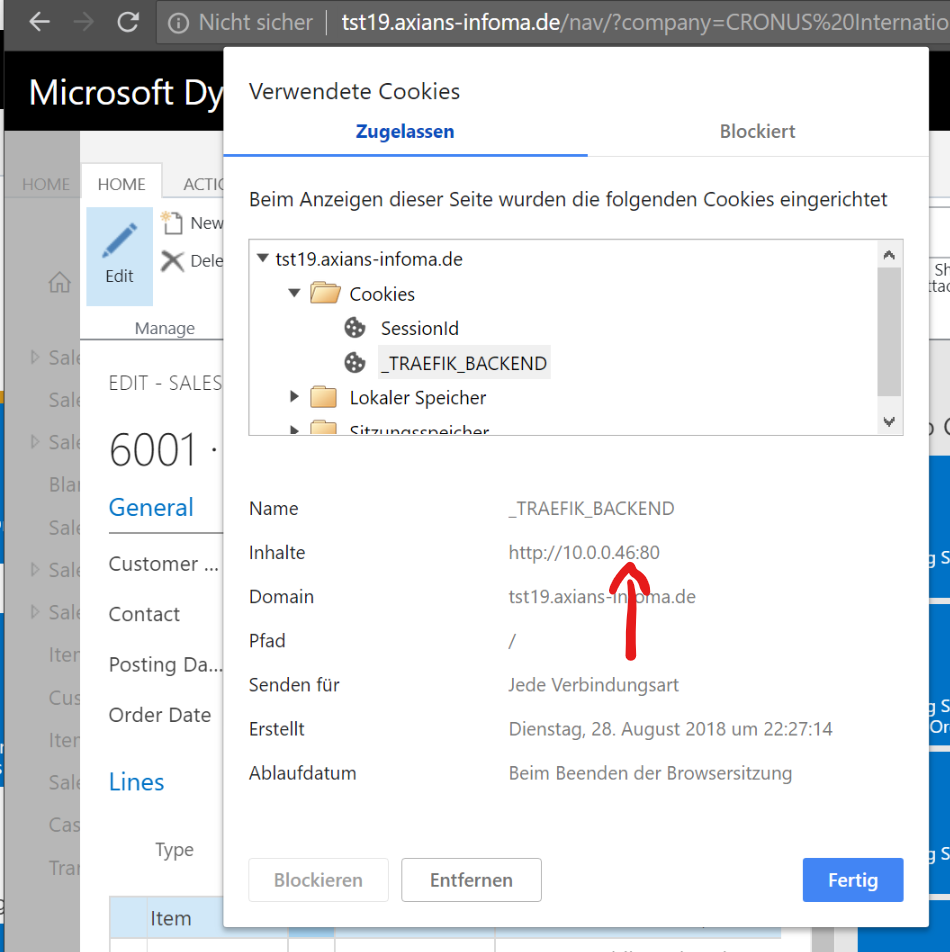
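NAV/BC containers take a while to start (hence --health-start-period 300s above), so after creating or scaling the service it can help to wait until all replicas are reported as running before opening the browser. A sketch polling docker service ls, under the same assumptions as the earlier helpers; wait_for_service, replicas_converged, the 10-second poll interval and the default timeout are my own choices, not part of the original setup.

```shell
# Hypothetical helper: true if a Replicas value such as "2/2" has all desired replicas running.
replicas_converged() {
  case "$1" in
    */*) ;;
    *) return 1 ;;
  esac
  local running="${1%%/*}" desired="${1#*/}"
  [ -n "$running" ] && [ "$running" = "$desired" ] && [ "$desired" -gt 0 ]
}

# Hypothetical helper: poll docker service ls every 10 seconds until all replicas
# of the service are running, or give up after the timeout (in seconds).
wait_for_service() {
  local svc="$1" timeout="${2:-600}" waited=0 replicas=""
  while [ "$waited" -lt "$timeout" ]; do
    # --filter name= matches substrings, so pick the exact service name with awk
    replicas=$(docker service ls --filter "name=$svc" --format '{{.Name}} {{.Replicas}}' |
      awk -v s="$svc" '$1 == s { print $2 }')
    if replicas_converged "$replicas"; then
      echo "$svc: $replicas"
      return 0
    fi
    sleep 10
    waited=$((waited + 10))
  done
  echo "$svc not ready after ${timeout}s (last seen: ${replicas:-none})" >&2
  return 1
}

# Usage, e.g. after docker service scale tst19=2:
#   wait_for_service tst19 900
```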

We can then use e.g. Chrome and IE to connect to the WebClient at (in my case) http://tst19.axians-infoma.de/nav and make sure we have two different requests going to two different instances. If we look at the cookies, we can see that Traefik is routing them to different backends.

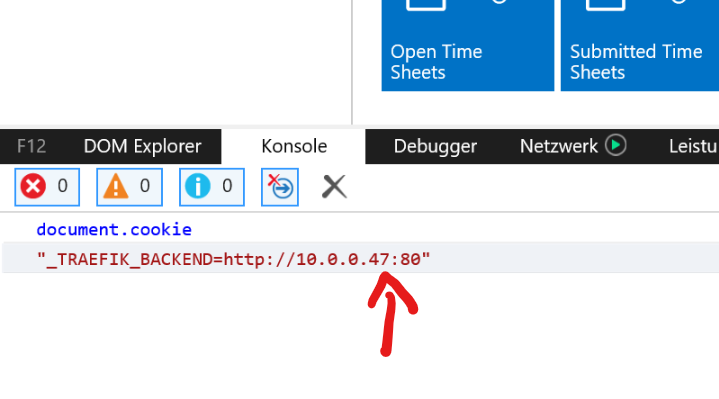

The cookie in the IE dev tools console:

And the cookie in the Chrome URL bar:

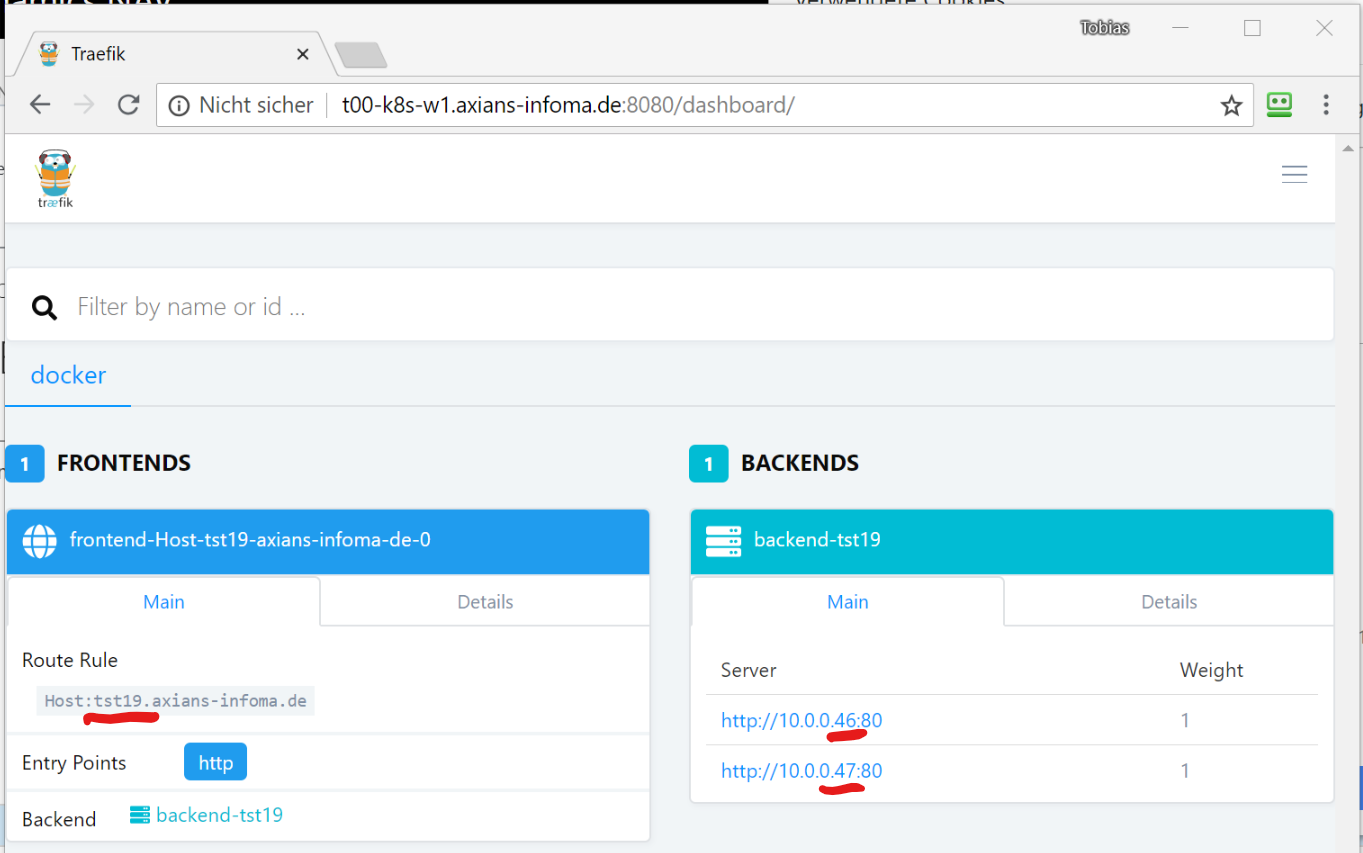

This corresponds with the Traefik dashboard, which is reachable on port 8080 of the manager node and shows us the frontend (where users connect) and the backends (our service instances).
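The browser check can also be scripted with curl, which supports Windows authentication via SPNEGO/Kerberos with --negotiate. A sketch under several assumptions: curl is built with GSS-API support, you have a Kerberos ticket for a domain user (e.g. from kinit in WSL), and the helper names webclient_login and jar_cookies are hypothetical. NTLM (--ntlm) would be the alternative, but as it authenticates a TCP connection rather than a single request, it may not work reliably through a reverse proxy.

```shell
# Hypothetical helper: open the WebClient with Windows authentication via
# SPNEGO/Kerberos (--negotiate with "-u :" uses the current Kerberos ticket),
# follow redirects, keep cookies in the given jar and print the final HTTP status.
# 200 suggests the login worked; 401 means authentication failed.
webclient_login() {
  local url="$1" jar="$2"
  curl -s -o /dev/null -w '%{http_code}\n' --negotiate -u : -L \
    -c "$jar" -b "$jar" "$url"
}

# Hypothetical helper: list name=value for every cookie in a curl cookie jar
# (Netscape format, 7 tab-separated fields), e.g. to spot Traefik's sticky cookie.
jar_cookies() {
  awk -F '\t' 'NF == 7 { print $6 "=" $7 }' "$1"
}

# Usage (assumes a ticket from kinit and curl built with GSS-API):
#   webclient_login http://tst19.axians-infoma.de/nav/ /tmp/client1.txt
#   jar_cookies /tmp/client1.txt
```

Running webclient_login twice with different jar files and comparing the output of jar_cookies should, like the two browsers, typically show the sticky cookie pointing at different backends.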

With that we have a dynamically scalable NAV environment as a Docker Swarm, using Windows authentication to connect to the WebClient. The Windows Client is a bit trickier because of the network protocols it uses, but that might be a topic for another post.

[1] Typically in Docker Swarm you would use something called an ingress overlay network, but unfortunately that is not yet available for Windows Docker hosts.

[2] In WSL, because that really is fun. Sorry if I am confusing you with that, but there is an everlasting Linux core in me :)

[3] If that isn’t a flashy headline, I don’t know what is.


4 comments on “Bringing it together: Windows auth in a NAV/BC image in a Docker Swarm”


Tobias, we did similar experiments but put this idea aside, as I did not find any solution that could proxy RTC TCP (WCF) traffic. So we are now looking into Kubernetes and already have good workable prototypes. Kubernetes is able to spin up a public IP and then proxy traffic to the internal BC container IP address. I could arrange a demo of it.

Hi Vlad,

we actually have a working solution in place, so the only question is whether my colleague working on this will blog about it or not 🙂 Kubernetes of course is very interesting, but as a lot of our customers, and therefore we as well, still rely heavily on Win Auth (in the Web and Windows Client), Kubernetes is currently not a fully valid option. Or did I miss something and this has become possible in Kubernetes?

See https://www.axians-infoma.de/navblog/load-balancing-navbc-in-docker-swarm/ for details on our working solution with a customized proxy which also supports Windows authentication 🙂

Things have moved on a lot since this original post but it still seems to be relevant.

We have a mixed-OS (Linux/Windows) docker swarm with Traefik and have successfully routed to Windows containers which have gMSA credential-specs injected. And, these same containers when accessed by host-published ports will prompt and validate AD credentials correctly.

However, we have been unable to get Traefik routing to these Windows containers with Windows auth working, presumably because of NTLM-style auth which requires some kind of TCP handshake. Perhaps the new TCP-style routers on Traefik 2.0 will fix this but they would seem to require declaring a new dedicated hostname for our Traefik cluster.

Do you know any different? Have you been able to route with HTTP via Traefik and still get Windows auth working on Windows containers?